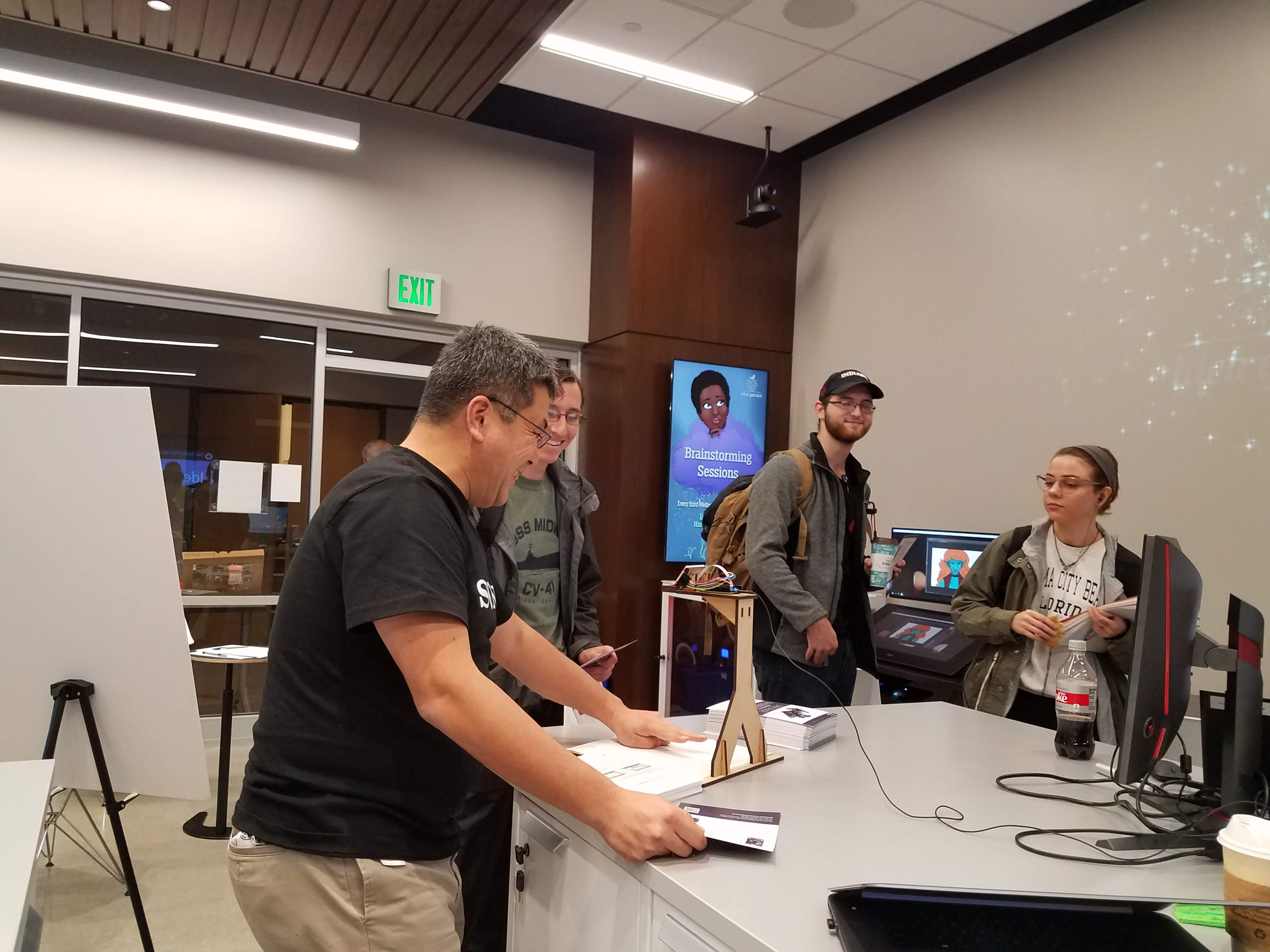

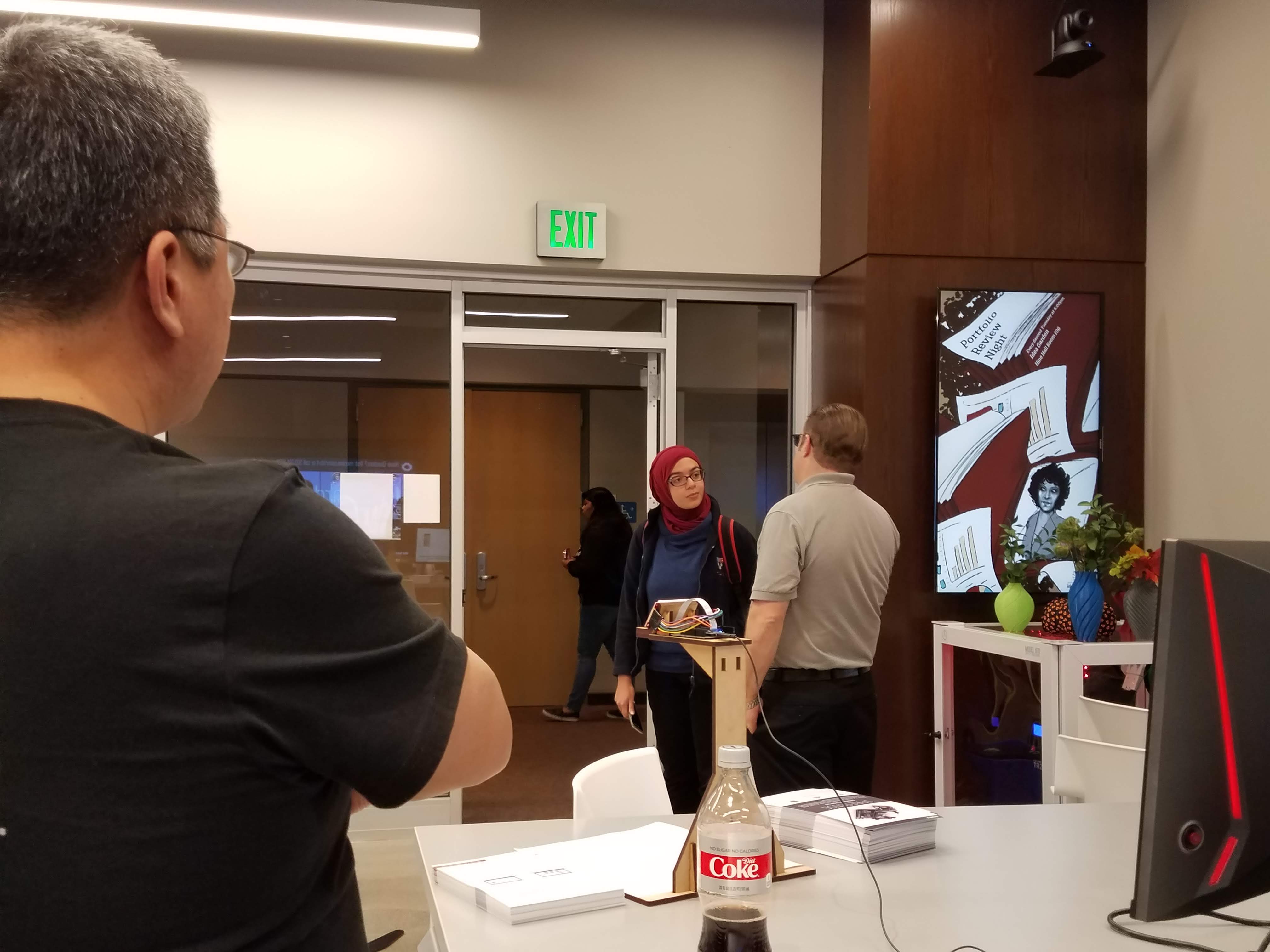

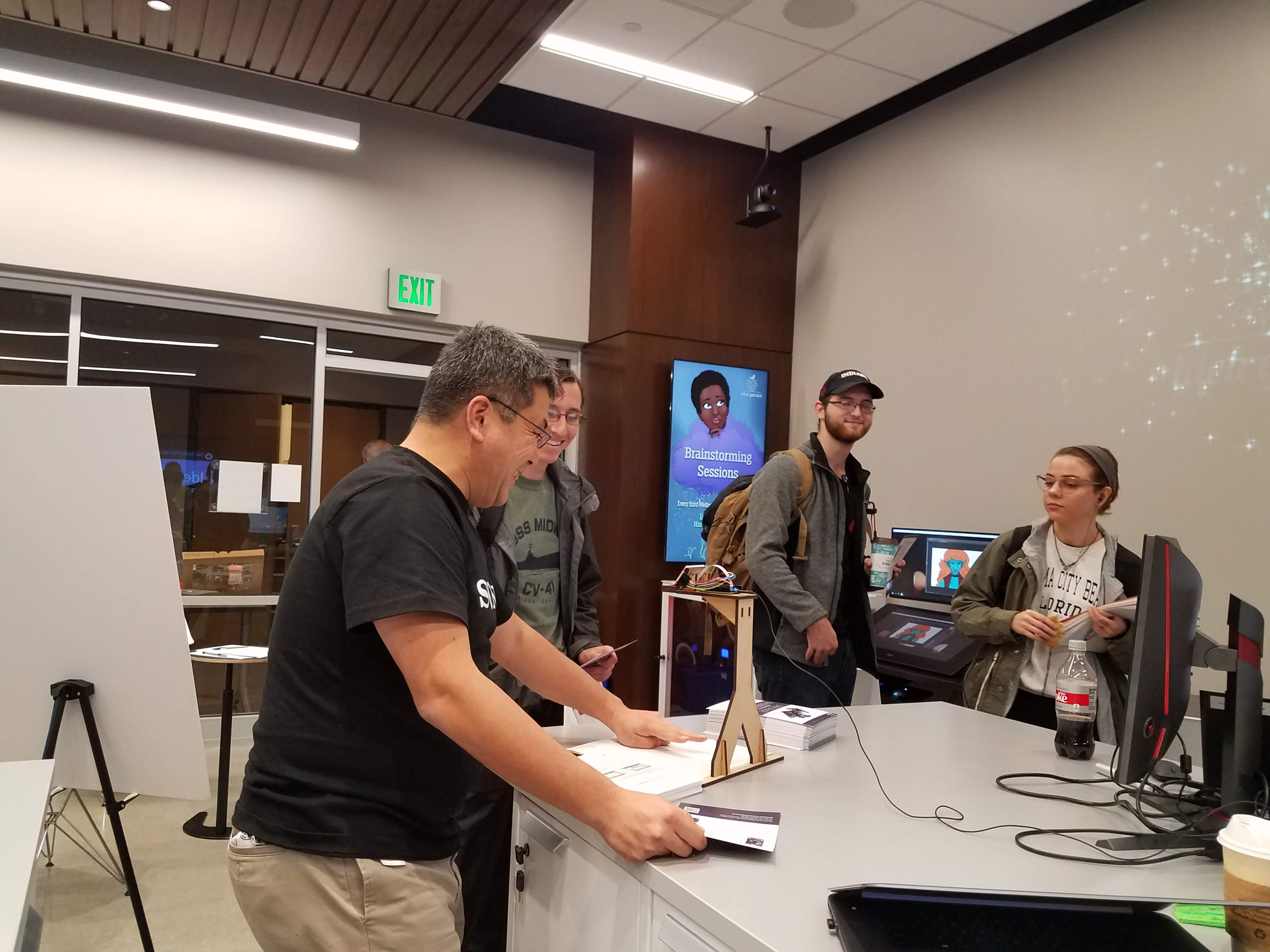

On October 24th, a few developers from Sony stopped by with four demos of new technology they have been developing that has yet to reach the consumer market.

Description of the video:

Neural Network Console. Full transcript available on YouTube.

We also had Catalyst Edit 360 VR on display in the space. This software uses a version of Sony Catalyst (their video editing software that came out after Sony Vegas) together with a virtual reality headset, letting users edit video taken with a 360 camera from essentially inside the footage. This makes for a better experience editing 360 footage and helps ensure a high-quality result for viewers.

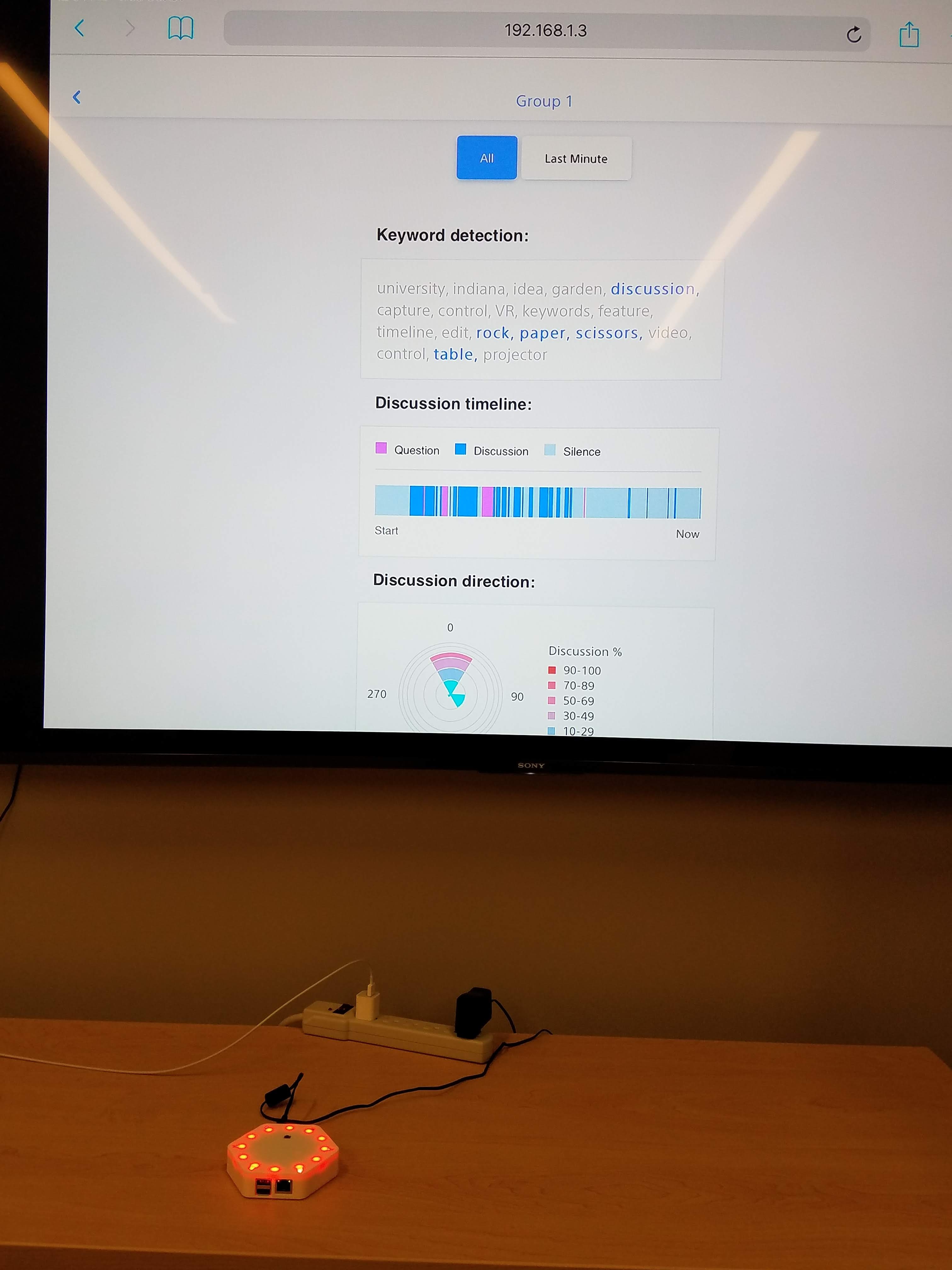

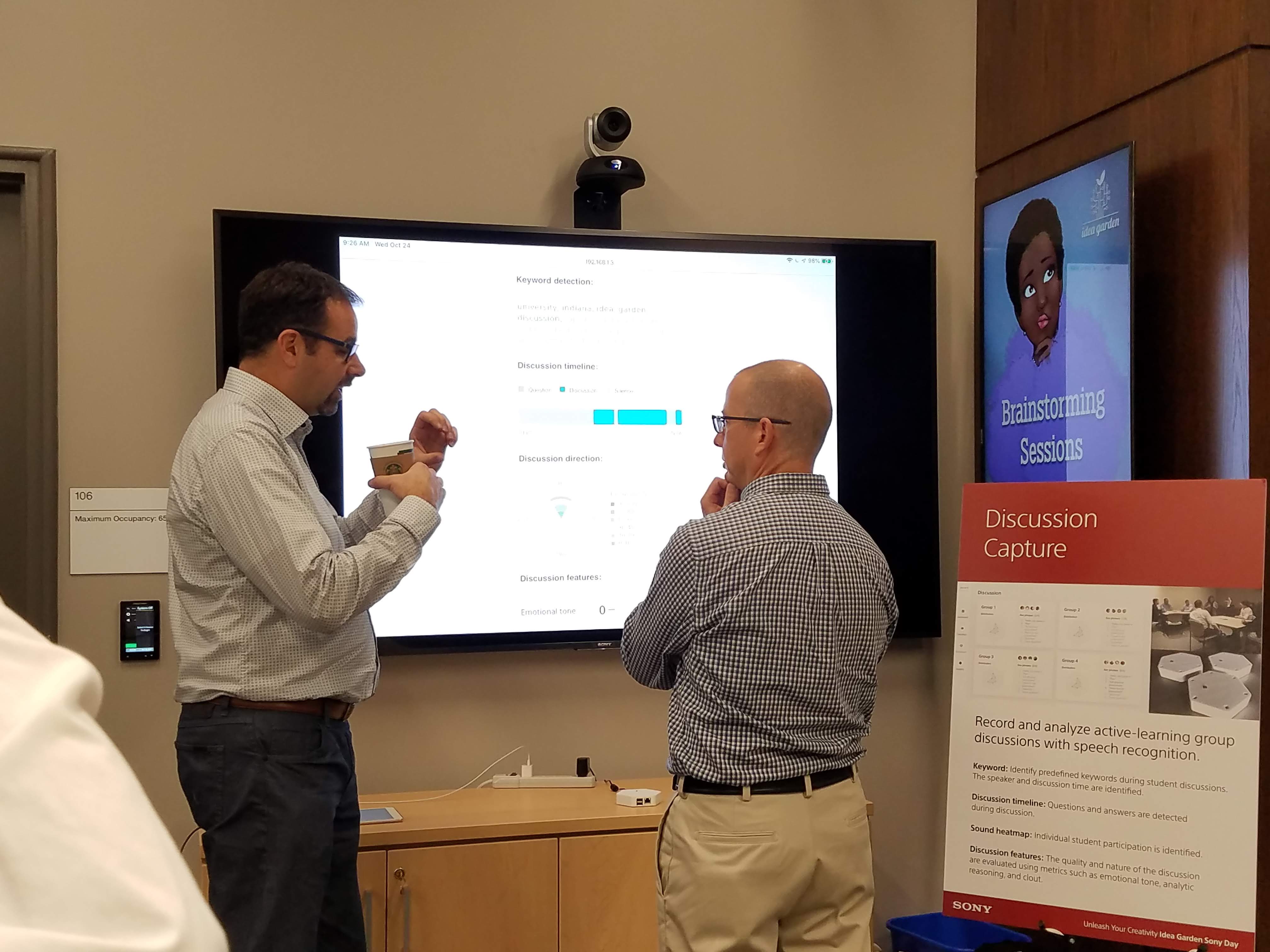

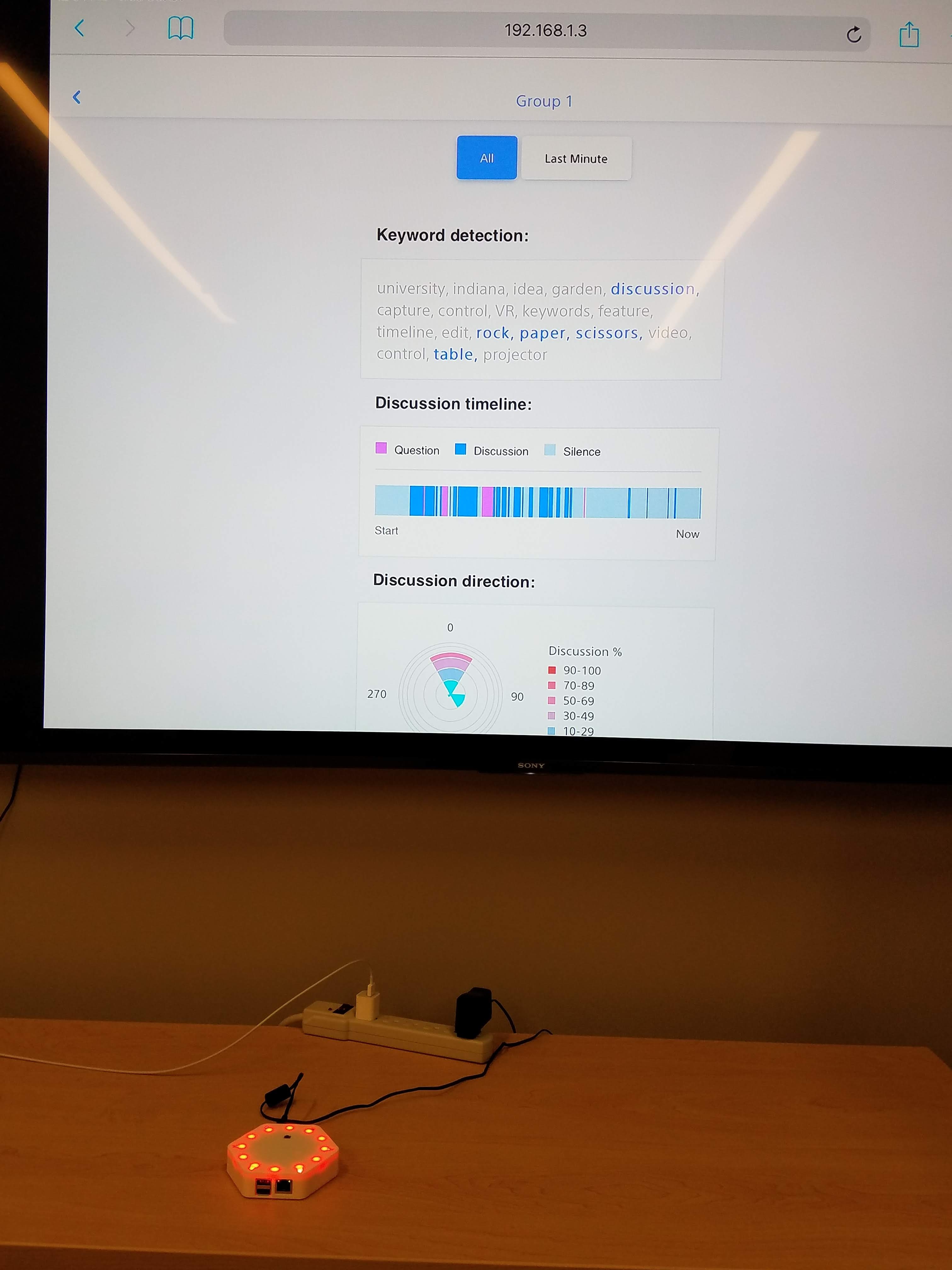

The third demo Sony brought with them was the Discussion Capture Devices. These devices can be placed in the center of a group of people, where they record and track the conversation happening within the group. Each device is connected to software that runs on an iPad and provides analytics on the details of the conversation. It can determine how many questions people are asking and how much time the group spends in silence. It can also identify keywords used in a discussion and detect how often those words come up. This could easily allow a teacher to track discussion in small groups among their students, making it easier to pinpoint which topics and concepts the students might be confused about and to make sure that the students are actively engaging in discussion with each other in class. This is

Finally, we were also able to demo the Concept Prototype T. This projection-based software creates an interactive space along three axes and uses gesture and object recognition to turn it into an interactive workspace. The software can do a wide variety of tasks. It can recognize notes written on paper and create a digital copy of each note in the projection. It can recognize text and images found in books to create a richer storytelling experience. It can also be used with physical objects to create a more interactive experience in almost any situation. Of the four demos, this technology feels the most versatile, with applications for business, group work, and entertainment.

Description of the video:

Full transcript available on YouTube.

Overall, the biggest limitation on uses for any of these technologies is simply what people can think of to do with them. The students who attended all seemed extremely excited about the prospect of using this technology in the future and about the chance to experience it firsthand. We want to thank Sony for giving us the time and resources to plan this event with them and show off this technology. If you missed this event, don’t miss the next one like it. Reading about the technology is one thing, but experiencing it firsthand is something else, so keep an eye on our social media accounts and our website for updates on upcoming events!